Part of the problem is that parents like me jump right into multiplayer mode, competing against our kids while simultaneously trying to learn the game mechanics. Most of these games have a single-player "campaign" mode. The campaign is designed both to be consistently entertaining and to make you better at the game step by step. The two primary rewards of the campaign are progressive discovery of the story (narrative) and progressive mastery of new skills.

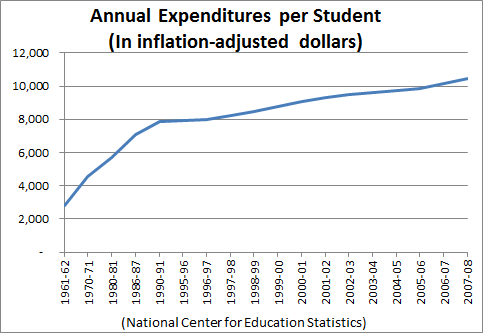

The Flow Channel in Game Design

In his book The Art of Game Design, Jesse Schell describes "flow", a concept he adopted from psychologist Mihaly Csikszentmihalyi. An individual is in a "flow state" when he or she is entirely involved in a task. "The rest of the world seems to fall away and we have no intrusive thoughts." It's a state of sustained focus and enjoyment.

Figure 1: Flow Channel

Figure 2: Growth Path in the Flow Channel

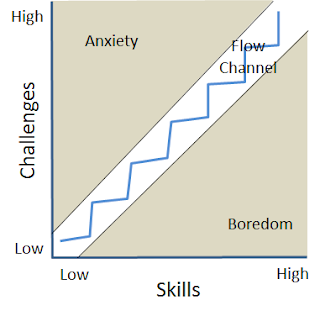

The Zone of Proximal Development

Russian psychologist Lev Vygotsky defined the Zone of Proximal Development (ZPD) as the area between the tasks a learner can do unaided and the tasks a learner cannot do even with help. Tasks in the ZPD, then, are those the learner can do with assistance, that is, with scaffolding. Vygotsky argued that all learning occurs within the ZPD.

Figure 3: Zone of Proximal Development (ZPD)

There are many ways to put this into practice. For example, the Lexile Framework and other text complexity measures make it possible to match reading materials to a student's reading ability. Texts close to a student's ability build confidence, while more challenging texts build skill. Texts that are too easy (boring) or too hard (anxiety-producing) can be avoided. Mathematics is a structured subject in which concepts build on one another, so the concepts in a student's ZPD are those that build on concepts the student already understands.

Integration

The Flow Channel offers a model for maximizing player engagement and enjoyment. The Zone of Proximal Development is a model for optimizing learning productivity. The similarity between the two isn't surprising: Csikszentmihalyi studied and built on Vygotsky's work.

One of the most important things educators can learn from Flow is that boredom contributes just as much to frustration as anxiety does. Conventional schooling does a lot of redundant work to ensure that most students "get" each concept. The boredom that results from such redundancy means that students rarely experience Flow in their schoolwork. It's also inefficient, because students spend a lot of time below their ZPD, where they aren't learning. Keeping students within the Flow Channel, and therefore within their ZPD, helps ensure that effective learning occurs while keeping them motivated and rewarded.

It gives a whole new meaning to "Go with the Flow!"