This is part 9 of a 10-part series on building high-quality assessments.

A 2015 survey of US adults indicated that 34% of those surveyed felt that standardized tests were merely fair at measuring students' achievement; 46% thought that the way schools use standardized tests had gotten worse; and only 20% were confident that tests had done more good than harm. The same year, the National Education Association surveyed 1500 members (teachers) and found that 70% did not feel that their state test was "developmentally appropriate."

In the preceding eight parts of this series I described all of the effort that goes into building and deploying a high-quality assessment. Most of these principles are implemented to some degree in the states represented by these surveys. What these opinion polls tell us is that regardless of their quality, these assessments aren't giving valuable insight to two important constituencies: parents and teachers.

The NEA article describes a hypothetical "Most Useful Standardized Test" which, among other things, would "provide feedback to students that helps them learn, and assist educators in setting learning goals." This brings up a central issue in contemporary testing. The annual testing mandated by the Every Student Succeeds Act (ESSA) is focused on school accountability. This was also true of its predecessor, No Child Left Behind (NCLB). Both acts are based on the theory of measuring school performance, reporting that performance, and incentivising better school performance. States and testing consortia also strive to facilitate better performance by reporting individual results to teachers and parents. But facilitation remains a secondary goal of large-scale standardized testing.

The frontiers in assessment I discuss here shift the focus to directly supporting student learning with accountability being a secondary goal.

- Curriculum-Embedded Assessment

- Dynamically-Generated Assessments

- Abundant Assessment

Curriculum-Embedded Assessment

The first model involves embedding assessment directly in the curriculum. Of course, nearly all curricula have embedded assessments of some sort. Math textbooks have daily exercises to apply the principles just taught. English and social studies texts include chapter-end quizzes and study questions. Online curricula intersperse the expository materials with questions, exercises, and quizzes. Some curricula even include pre-built exams. But these existing assessments lack the quality assurance and calibration of a high-quality assessment.

In a true Curriculum-Embedded Assessment, some of the items that appear in the exercises and quizzes would be developed with the same rigor as items on a high-stakes exam. They would be aligned to standards, field tested, and calibrated before appearing in the curriculum. In addition to contributing to the score on the exercise or quiz, the scores of these calibrated items would be aggregated into an overall record of the student's mastery of each skill in the standard.

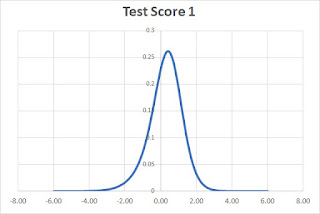

Since the exercises and quizzes would not be administered in as controlled an environment as a high-stakes exam, the scores would not individually be as reliable as in a high-stakes environment. But by accumulating many more data points, and doing so continuously through the student's learning experience, it's possible to assemble an evaluation that is as reliable or more reliable than a year-end assessment.
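One way to see why many less-reliable measurements can add up to a reliable one is the Spearman-Brown prediction formula from classical test theory. The sketch below is an illustration only: the starting reliability of 0.50 is a made-up number, and the formula assumes the quizzes are comparable measures of the same skill, which is an idealization when the student is learning between quizzes.

```python
def spearman_brown(base_reliability: float, length_factor: float) -> float:
    """Predicted reliability when a measure is lengthened by `length_factor`.

    Spearman-Brown prediction formula:
        r_new = k * r / (1 + (k - 1) * r)
    where r is the reliability of one unit and k is how many comparable
    units are combined.
    """
    if not 0.0 <= base_reliability < 1.0:
        raise ValueError("base_reliability must be in [0, 1)")
    if length_factor <= 0:
        raise ValueError("length_factor must be positive")
    k, r = length_factor, base_reliability
    return (k * r) / (1.0 + (k - 1.0) * r)


if __name__ == "__main__":
    # Hypothetical: a single classroom quiz with reliability 0.50.
    quiz_reliability = 0.50
    for n_quizzes in (1, 4, 10, 20):
        combined = spearman_brown(quiz_reliability, n_quizzes)
        print(f"{n_quizzes:2d} quizzes -> predicted reliability {combined:.3f}")
```

Under these assumptions, four such quizzes together reach a predicted reliability of 0.80, and the figure keeps rising as more are accumulated.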

Curriculum-Embedded Assessment has several advantages over either a conventional achievement test or the existing exercises and quizzes:

- Student achievement relative to competency is continuously updated. This can offer much better guidance to students, educators, and parents than existing programs.

- Student progress and growth can be continuously measured across weeks and months, not just years.

- Performance relative to each competency can be reliably reported. This information can be used to support personalized learning.

- Data from calibrated items can be correlated to data from the rest of the items on the exercise or quiz. Over time, these data can be used to calibrate and align the other items, thereby growing the pool of reliable and calibrated assessment items.

- As Curriculum-Embedded Assessment is proven to offer data as reliable as year-end standardized tests, the standardized tests can be eliminated or reduced in frequency.

Dynamically-Generated Assessments

As described in my post on test blueprints, high-quality assessments begin with a bank of reviewed, field-tested, and calibrated items. Then, a test producer selects from that bank a set of items that match the blueprint of skills to be measured. For Computer-Adaptive Tests, the test is presented to a simulated set of students to determine how well it can measure student skill in the expected range.

In order to provide more frequent and fine-grained measures of student skills, educators prefer shorter interim tests to be used more frequently during the school year. Due to demand from districts and states, the Smarter Balanced Assessment Consortium will more than double the number of interim tests it offers over the next two years. Most of the new tests will be focused on just one or two targets (competencies) and have four to six questions. They will be short enough to be given in a few minutes at the beginning or end of a class period.

But what if you could generate custom tests on-demand to meet specific needs of a student or set of students? A teacher would design a simple blueprint — the skills to be measured and the degree of confidence required on each. Then the system could automatically generate the assessment, the scoring key, and the achievement levels based on the items in the bank and their associated calibration data.

Dynamically-generated assessments like these could target needs specific to a student, cluster of students, or class. With a sufficiently rich item bank, multiple assessments could be generated on the same blueprint, thereby allowing multiple tries. Generating assessments this way should also reduce the cost of producing all of those short, fine-grained assessments.
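To make the idea concrete, here is a minimal sketch of blueprint-driven form generation. Everything in it is an assumption made for illustration: the `Item` class, the `generate_form` function, the choice of a Rasch model for item calibration, and the reading of "degree of confidence" as a maximum standard error per skill. It also leaves out the scoring key and achievement levels. It is not how any particular testing system works.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    item_id: str
    skill: str
    difficulty: float  # assumed Rasch difficulty (logits) from calibration


def rasch_information(difficulty: float, ability: float) -> float:
    """Fisher information of a Rasch item at a given ability: p * (1 - p)."""
    p = 1.0 / (1.0 + math.exp(-(ability - difficulty)))
    return p * (1.0 - p)


def generate_form(bank, blueprint, target_ability=0.0, exclude=frozenset(), rng=None):
    """Pick items per skill until the blueprint's standard-error target is met.

    `blueprint` maps skill -> maximum acceptable standard error (logits).
    `exclude` holds item ids already used, so a retake gets fresh items.
    """
    rng = rng or random.Random()
    form = []
    for skill, max_se in blueprint.items():
        needed_info = 1.0 / max_se ** 2  # SE = 1 / sqrt(total information)
        candidates = [i for i in bank if i.skill == skill and i.item_id not in exclude]
        rng.shuffle(candidates)  # break ties randomly; the sort below is stable
        candidates.sort(
            key=lambda i: rasch_information(i.difficulty, target_ability),
            reverse=True,
        )
        info = 0.0
        for item in candidates:
            if info >= needed_info:
                break
            form.append(item)
            info += rasch_information(item.difficulty, target_ability)
        if info < needed_info:
            raise ValueError(f"item bank too small for skill {skill!r}")
    return form


# Hypothetical bank: 12 calibrated items for each of two skills.
bank = [
    Item(f"{skill}-{n}", skill, -1.1 + 0.2 * n)
    for skill in ("fractions", "ratios")
    for n in range(12)
]
blueprint = {"fractions": 1.0, "ratios": 1.0}  # max standard error per skill

first_try = generate_form(bank, blueprint, rng=random.Random(1))
used = frozenset(item.item_id for item in first_try)
second_try = generate_form(bank, blueprint, exclude=used, rng=random.Random(2))
```

Passing the first form's item ids as `exclude` yields a second form on the same blueprint with no repeated items, which is what allows multiple tries. A richer bank would let the same approach produce more forms before running out.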

Abundant Assessment

Ideally, school should be a place where students are safe to make mistakes. We generally learn more from mistakes than from successes because failure affords us the opportunity to correct misconceptions and gain knowledge whereas success merely confirms existing understanding.

Unfortunately, school isn't like that. Whether primary, secondary, or college, school tends to punish failures. At the college level, a failed assignment is generally unchangeable, and a failed class or low grade goes on the permanent record. Consider a student who studies hard all semester, gets reasonable grades on homework, but then blows the final exam. Perhaps they were sick on exam day, or perhaps the questions were confusing and different from what they expected, or perhaps the pressure of the exam just messed them up. Their only option is to repeat the whole class, and even then their permanent record will show the class repetition.

Why is this? Why do schools amplify the consequences of such small events? It's because assessments are expensive. They cost a lot to develop, to administer, and to score. In economic terms, assessments are scarce. For schools to offer easy recovery from failure they would have to develop multiple forms for every quiz and exam. They would have to incur the cost of scoring and reporting multiple times. And they would have to select the latest score and ignore all others. To date, such options have been cost-prohibitive.

"Abundant Assessment" is the prospect of making assessment inexpensive, or "abundant" in economic terms. In such a framework, students would be afforded many tries until they succeed or are satisfied with their performance. Negative consequences of failure would be eliminated and the opportunity to learn from failure would be amplified.

This could be achieved by a combination of collaboration and technology. Presently, most quizzes and exams are written by teachers or professors for their class only. If their efforts were pooled into a common item bank, then you could rapidly achieve a collection large enough to generate multiple exams on each topic area. Technological solutions would provide dynamically-generated assessments (as described in the previous section), online test administration, and automated scoring. All of this would dramatically reduce the labor involved in producing, administering, scoring, and reporting exams and quizzes.

Abundant assessment dramatically changes the cost structure of a school, college, or university. When it is no longer costly to administer assessments, you can encourage students to try early and repeat if they don't achieve the desired score. Each assessment, whether an exercise, quiz, or exam, can be a learning experience with students encouraged to learn quickly from errors.

Wrapup

These three frontiers are synergistic. I can imagine a student, let's call her Jane, studying in a blended learning environment. Encountering a topic with which she is already familiar, Jane jumps ahead to the topic quiz. But the questions involve concepts she hasn't yet mastered and she fails. Nevertheless, this is a learning experience. Indeed, it could be reframed as a formative assessment as she now goes back and studies the material knowing what will be demanded of her in the assessment. After studying, and working a number of the exercises, Jane returns to the topic assessment and is presented with a new quiz, equally rigorous, on the same subject. This time she passes.

Outside the frame of Jane's daily work, the data from her assessments and those of her classmates are being accumulated. When the time comes, at the end of the year, to report on school performance, the staff are able to produce reliable evidence of student and school performance without the need for day-long standardized testing.

Most importantly, throughout this experience Jane feels confident and safe. At no point is she nervous that a mistake will have any long-term consequence. Rather, she knows that she can simply persist until she understands the subject matter.